Tahereh Zarrat Ehsan

Artificial Intelligence Enthusiast and Researcher

Biography

I earned my M.Sc. and B.Sc. degrees in Electrical Engineering from the University of Guilan, with a primary research focus on computer vision, machine learning, and deep learning. My thesis addressed violence detection in video sequences using image and video processing techniques.

I am enthusiastic about exploring opportunities for research collaboration in these areas. If you are interested, please feel free to contact me at zarrat.ehsan [AT] gmail.com.

Download my resumé.

- Machine Learning

- Unsupervised and Probabilistic Deep Learning

- Image and Video Processing

- Generative Modeling

- Representation Learning

- Generalizability of Deep Learning

- Anomaly Detection

- Violence Detection

- Breast Cancer Detection

- M.Sc. in Electrical Engineering, 2019, University of Guilan

- B.Sc. in Electrical Engineering, 2014, University of Guilan

Skills

Experience

Research on violence detection techniques:

- Deep learning methods such as Transformers, attention, GNNs, and AEs, using customized loss functions and the maximum likelihood principle

- Probabilistic deep learning, unsupervised learning, and generative modeling such as VAE, GAN, pix2pix, CycleGAN, and normalizing flows

- Published 2 IEEE international conference papers at MVIP and IKT

- Published 2 journal papers in Multimedia Tools and Applications (Q1) and The Visual Computer (Q2)

- Published a book chapter in an IET journal

- Submitted a paper to arXiv

Research and Python implementation of:

- Various image processing techniques for abnormal human behavior recognition such as object detection, motion estimation, segmentation, feature extraction, feature matching, and optimization

- Published 1 IEEE international conference paper at ICCKE

Accomplishments

Featured Publications

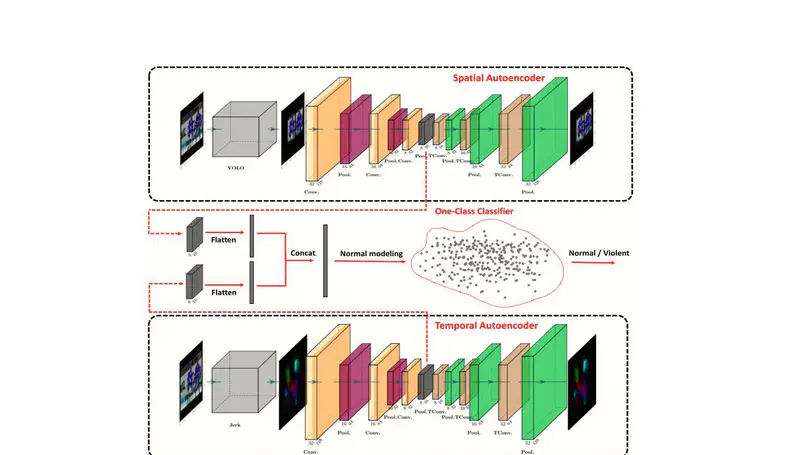

Numerous violent actions occur in the world every day, affecting victims mentally and physically. To reduce violence rates in society, an automatic system may be required to analyze human activities quickly and detect violent actions accurately. Violence detection is a complex machine vision problem involving insufficient violent datasets and wide variation in activities and environments. In this paper, an unsupervised framework is presented to discriminate between normal and violent actions overcoming these challenges. This is accomplished by analyzing the latent space of a double-stream convolutional AutoEncoder (AE). In the proposed framework, the input samples are processed to extract discriminative spatial and temporal information. A human detection approach is applied in the spatial stream to remove background environment and other noisy information from video segments. Since motion patterns in violent actions are entirely different from normal actions, movement information is processed with a novel Jerk feature in the temporal stream. This feature describes the long-term motion acceleration and is composed of 7 consecutive frames. Moreover, the classification stage is carried out with a one-class classifier using the latent space of AEs to identify the outliers as violent samples. Extensive experiments on Hockey and Movies datasets showed that the proposed framework surpassed the previous works in terms of accuracy and generality.
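As a rough illustration of the idea behind a jerk-style motion feature, jerk can be approximated as the third finite difference of a position signal. The sketch below is not the formulation used in the paper, which is not given on this page. It assumes a hypothetical 1-D tracked position sampled over 7 consecutive frames, and the function names are illustrative only.

```python
def finite_difference(values):
    """Return the differences between consecutive samples."""
    return [b - a for a, b in zip(values, values[1:])]


def jerk(positions, dt=1.0):
    """Approximate jerk as the third finite difference of position.

    Assumption: `positions` is a 1-D position signal sampled every `dt`
    time units (for example, one sample per video frame).
    """
    if len(positions) < 4:
        raise ValueError("need at least 4 samples to estimate jerk")
    velocity = [d / dt for d in finite_difference(positions)]
    acceleration = [d / dt for d in finite_difference(velocity)]
    return [d / dt for d in finite_difference(acceleration)]


# A 7-frame window yields 4 jerk estimates.
constant_acceleration = [0.5 * t**2 for t in range(7)]  # smooth motion
changing_acceleration = [t**3 for t in range(7)]        # abrupt motion

smooth_jerk = jerk(constant_acceleration)
abrupt_jerk = jerk(changing_acceleration)
```

In this toy setting, motion with constant acceleration gives zero jerk, while motion whose acceleration keeps changing gives a nonzero value, which is the kind of contrast a jerk feature is meant to expose.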

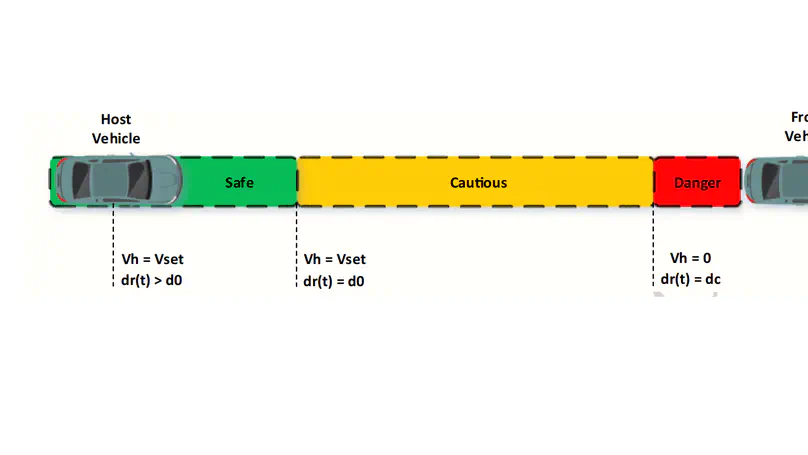

Comfort in Autonomous Vehicles (AVs) is a decisive aspect and plays an essential role in their advanced driving systems. As comfort is directly influenced by the amount of acceleration and deceleration, a smooth longitudinal driving strategy can significantly improve passengers' acceptance level. Although some safe longitudinal strategies such as time-headway have been introduced for AVs, the breakpoints in their speed generation models cause uncomfortable behavior when approaching the front vehicle. In this paper, we propose a continuous and differentiable reference speed model with a single equation to cover all possible relative distances. This model is constructed based on the well-known attributes of a hyperbolic tangent curve to smoothly change the speed of the host vehicle at the corner points. Moreover, the adjustable variables in our reference speed generator make it possible to choose between low- and high-acceleration driving strategies. The experiments are performed on several driving scenarios such as stop-and-go, hard-stop, and normal driving, and the results are compared with different reference speed models. The maximum improvement is obtained in the stop-and-go scenario, and on average, improvements of about 7.29% and 12.47% are achieved in the magnitude of acceleration and jerk, respectively.
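To make the idea of a single smooth speed equation concrete, here is a minimal sketch of a reference speed curve built from a hyperbolic tangent. It is not the equation from the paper, which does not appear on this page. The function name, the parameters `v_max`, `d_mid`, and `steepness`, and their default values are all assumptions chosen for illustration.

```python
import math


def reference_speed(distance, v_max=20.0, d_mid=30.0, steepness=0.1):
    """Smooth reference speed as a function of relative distance.

    Assumed illustrative form (not the paper's model):
        v(d) = 0.5 * v_max * (1 + tanh(steepness * (d - d_mid)))

    The curve is continuous and differentiable for every distance, so it
    has no breakpoints. A larger `steepness` changes speed over a shorter
    distance range, which corresponds to a higher-acceleration strategy.
    """
    return 0.5 * v_max * (1.0 + math.tanh(steepness * (distance - d_mid)))


# Sample the curve from 0 m to 99 m of relative distance.
speeds = [reference_speed(float(d)) for d in range(100)]
```

With these assumed defaults the speed stays near zero at short distances, passes through half of `v_max` at `d_mid`, and approaches `v_max` when the front vehicle is far away.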

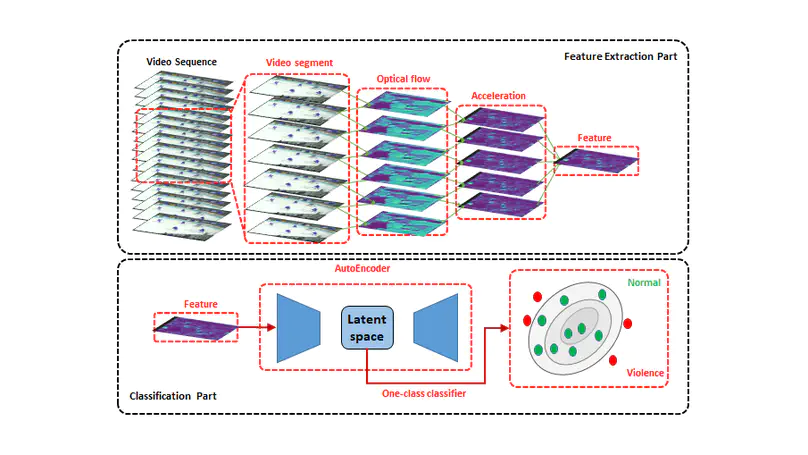

Violent crime is one of the main causes of death and mental disorder among adults worldwide. It increases emotional distress in families and communities, including depression, anxiety, and post-traumatic stress disorder. Automatic violence detection in surveillance cameras is an important research area to prevent physical and mental harm. Previous human behavior classifiers are based on learning both normal and violent patterns to categorize new unknown samples. Because there are few large datasets with various violent actions, these classifiers do not generalize well to unseen situations. This paper introduces a novel unsupervised network based on motion acceleration patterns to derive and abstract discriminative features from input samples. This network is built on an AutoEncoder architecture and requires only normal samples in the training phase. Classification is performed with a one-class classifier that separates violent from normal actions. Results on the Hockey and Movie datasets showed that the proposed network achieved outstanding accuracy and generality compared to state-of-the-art violence detection methods.
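The idea of training on normal samples only and flagging everything else can be sketched with a very simple one-class rule. The code below is a stand-in, not the classifier used in the paper, which is not specified on this page. It assumes that latent vectors have already been produced by some encoder, and it uses a hypothetical centroid-plus-radius rule with an assumed `margin` parameter.

```python
import math


def fit_one_class(normal_latents, margin=1.1):
    """Fit a centroid and radius using normal latent vectors only.

    Assumption: each latent vector is a list of floats of equal length.
    The radius is the largest training distance, enlarged by `margin`.
    """
    dim = len(normal_latents[0])
    count = len(normal_latents)
    center = [sum(v[i] for v in normal_latents) / count for i in range(dim)]
    radius = margin * max(math.dist(v, center) for v in normal_latents)
    return center, radius


def is_outlier(latent, center, radius):
    """Flag a sample as an outlier if it lies outside the fitted radius."""
    return math.dist(latent, center) > radius


# Toy 2-D latent vectors standing in for encoded normal clips.
normal = [[0.0, 0.1], [0.1, 0.0], [-0.1, 0.0], [0.0, -0.1]]
center, radius = fit_one_class(normal)

near_sample_flagged = is_outlier([0.05, 0.0], center, radius)
far_sample_flagged = is_outlier([2.0, 2.0], center, radius)
```

No violent examples are needed to fit the rule, which mirrors the training setup described above. A sample far from the normal cluster in latent space is treated as a candidate violent action.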

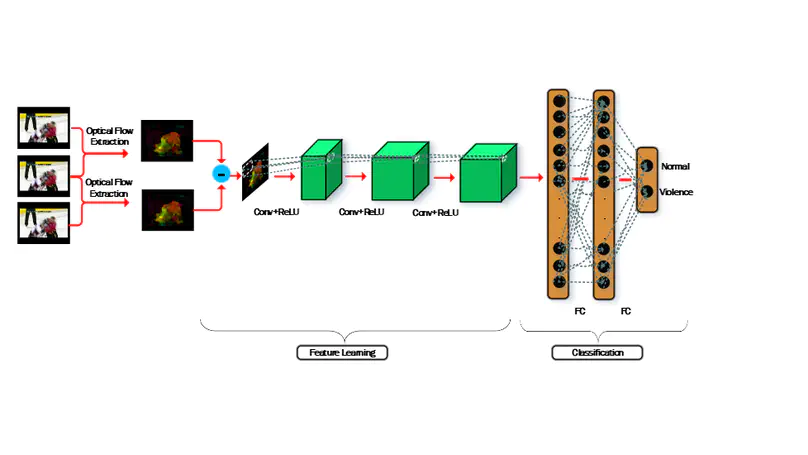

Video surveillance cameras are widely used due to security concerns. Analyzing these large amounts of video by a human operator is a difficult and time-consuming job. To overcome this problem, automatic violence detection in video sequences has become an active research area of computer vision in recent years. Early methods relied on hand-crafted features, but these are not discriminative enough for complex actions like violence. Extracting complex behavioral features automatically requires deep networks. In this paper, we propose a novel Vi-Net architecture based on a deep Convolutional Neural Network (CNN) to detect actions with abnormal velocity. Motion patterns of targets in the video are estimated by optical flow vectors to train the Vi-Net network. As violent behavior comprises fast movements, these vectors are useful for extracting distinctive features. We performed several experiments on the Hockey, Crowd, and Movies datasets, and the results showed that the proposed architecture achieved higher accuracy than state-of-the-art methods.

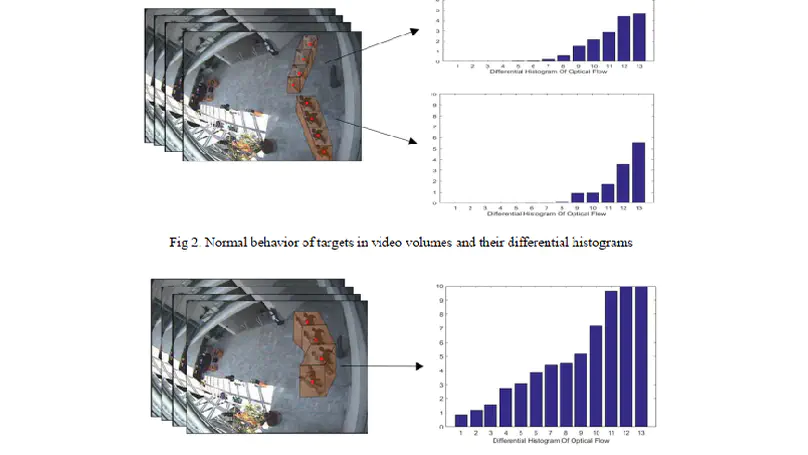

Intelligent surveillance systems and automatic detection of abnormal behaviors have become a major research problem in recent years due to increased security concerns. Violent behaviors are highly diverse, so distinguishing between them is the most challenging problem in video surveillance systems. Recent works show a strong need for unique and discriminative features to represent violent behaviors. In this paper, a novel violence detection method is proposed based on a combination of motion trajectory and spatio-temporal features. Dense sampling is carried out on spatio-temporal volumes along the target's path to extract Differential Histogram of Optical Flow (DHOF) and standard deviation of motion trajectory features. These novel features are used to train a Support Vector Machine (SVM) to classify video volumes into two categories, normal and violent. Experimental results demonstrate that the proposed method outperforms other state-of-the-art violence detection methods and achieves 91% detection accuracy.